The Big Video Sprint conference consisted of various keynotes, group discussions, data sessions and methods sprints. Conference participants explored new ways of collecting time-based records of "social, material and embodied practices as live-action events in real or virtual worlds".

The term "Big Video" was coined by professors Paul McIlvenny and Jacob Davidsen at Aalborg University as “an alternative to the hype about quantitative big data analytics”. The two professors are working on an enhanced infrastructure for qualitative video analysis, which covers capturing, visualizing and sharing video, as well as qualitative tools to support analysis (read more in their blog post).

The Big Video manifesto - how "big" should data be?

The conference program opened with the organizers presenting their Manifesto for Big Video (“re-sensing video and audio”). Points in this manifesto include the non-neutral nature of recording technologies, the proliferation of advanced cameras and microphones, the advent of online video archives (containing raw footage), and the rapid rise of immense computing power. In light of these developments, data capturing and qualitative analysis should be rethought. For instance, multi-stream data makes it possible to analyze different layers of an event, thus better depicting the "plurality" of events. One may even “inhabit the data”, and create immersive environments to “stage” video data.

Later that day, McIlvenny and Davidsen demonstrated a custom-made tool for analyzing and annotating 360-degree video in a Virtual Reality (VR) environment (see Figure 1). Using an HTC Vive VR headset, they showed the possibilities of (re)playing immersive video and adding annotations by drawing and voice recording.

Keynotes and talks

During the three conference days, the keynote speakers introduced ethnocinema as method, a tool for multimodal analysis named ChronoViz, and Video and Art Probing.

Most of the participating researchers had a background in ethnomethodology & conversation analysis (EMCA), and they presented and discussed the opportunities that new capture methods bring to their research activities. Examples included observations in optician shops, in-passing interactions in hospitals, event participants' perspectives, and studies of news production. Different presentations showed how various types of capture devices (e.g. 360-degree video cameras and spatial microphones) allowed for more (and better) research opportunities. Other perspectives were covered by presentations on functionality needs for video analysis tools, and on research infrastructure.

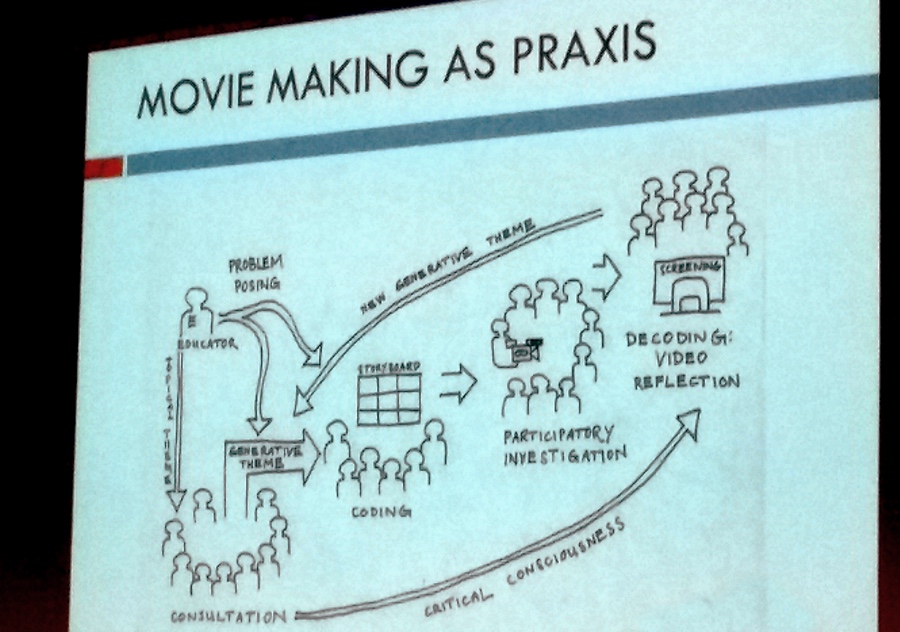

On a different note, Grady Walker, a researcher at the University of Reading, demonstrated on the second day of the conference the value of movie making as praxis in critical pedagogy. Through video production, dissemination and analysis, youth in Nepal could examine the dominant narratives in their community (e.g. the pressure to conform to dominant norms), while also examining alternative life trajectories.

On the third day of the conference, we presented XIMPEL, the interactive media framework used for most of the touch table applications designed in the Visual Navigation Project (view presentation). We outlined the ten-year history and key characteristics of the framework, and showed distinctive examples of media applications created during the last ten years.

The conference also offered a valuable opportunity to discuss further video-based research cases, and other participants expressed interest in using XIMPEL for presenting some of their data in an immersive way. With respect to the UiO Science Library, we discussed how to provide links between the rapidly growing (streaming) video collections and the wealth of resources in the regular collections of publications and books.

Inspiration for the Visual Navigation Project

The conference provided ample inspiration for further development and use of the XIMPEL framework, including potential support for 360-degree video. It also sparked further ideas for the Visual Navigation Project, as well as for a more generalized library setting. At the data capturing end, we learned more about the opportunities (and drawbacks) of advanced video recording devices, such as 3D and 360-degree video cameras. With the large number of events taking place in current libraries in Norway, for instance in the UiO Science Library, exploring alternative recording methods can be useful.

We also learned more about novel methods for analyzing and interacting with these kinds of captures. For instance, the ability to "turn around" in 360-degree video can be helpful when editing video created in complex settings (and makes it possible to choose focal points at the video editing stage). Moreover, the use of VR for immersive exploration and annotation of this video was an eye-opener. We would like to explore how these kinds of technologies can be used in a library setting, allowing for more immersive interactions with library materials.
